Getting Started - Execute Postman Collection

Follow this guide for a basic example of running a test that replays a Postman Collection.

Creating a Postman Collection

To create a Postman Collection, follow the steps in the Postman Collection documentation.
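If you do not have a collection handy, the sketch below writes a minimal one-request collection file by hand. It assumes the Postman Collection v2.1 layout: an `info` object with `name` and `schema`, plus an `item` list of requests. The request name, target URL, and output filename are hypothetical placeholders. A collection exported from the Postman app will contain more fields, and exporting from Postman is typically the easier route.

```python
import json

# Schema URL that Postman writes into exported v2.1 collections.
SCHEMA_V21 = "https://schema.getpostman.com/json/collection/v2.1.0/collection.json"


def minimal_collection(name: str, url: str) -> dict:
    """Build a collection with a single GET request in the v2.1 layout."""
    return {
        "info": {"name": name, "schema": SCHEMA_V21},
        "item": [
            {
                # Hypothetical request name; any label works.
                "name": "Get home page",
                "request": {"method": "GET", "url": url},
            }
        ],
    }


if __name__ == "__main__":
    # Hypothetical target URL: replace it with an endpoint you are allowed to load test.
    collection = minimal_collection("Postman Demo", "https://example.com/")
    with open("postman-demo.postman_collection.json", "w", encoding="utf-8") as f:
        json.dump(collection, f, indent=2)
```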

Set up a Testable test

Start by signing up and creating a new test case using the Create Test button on the dashboard.

Enter the test case name (e.g. Postman Demo) and press Next.

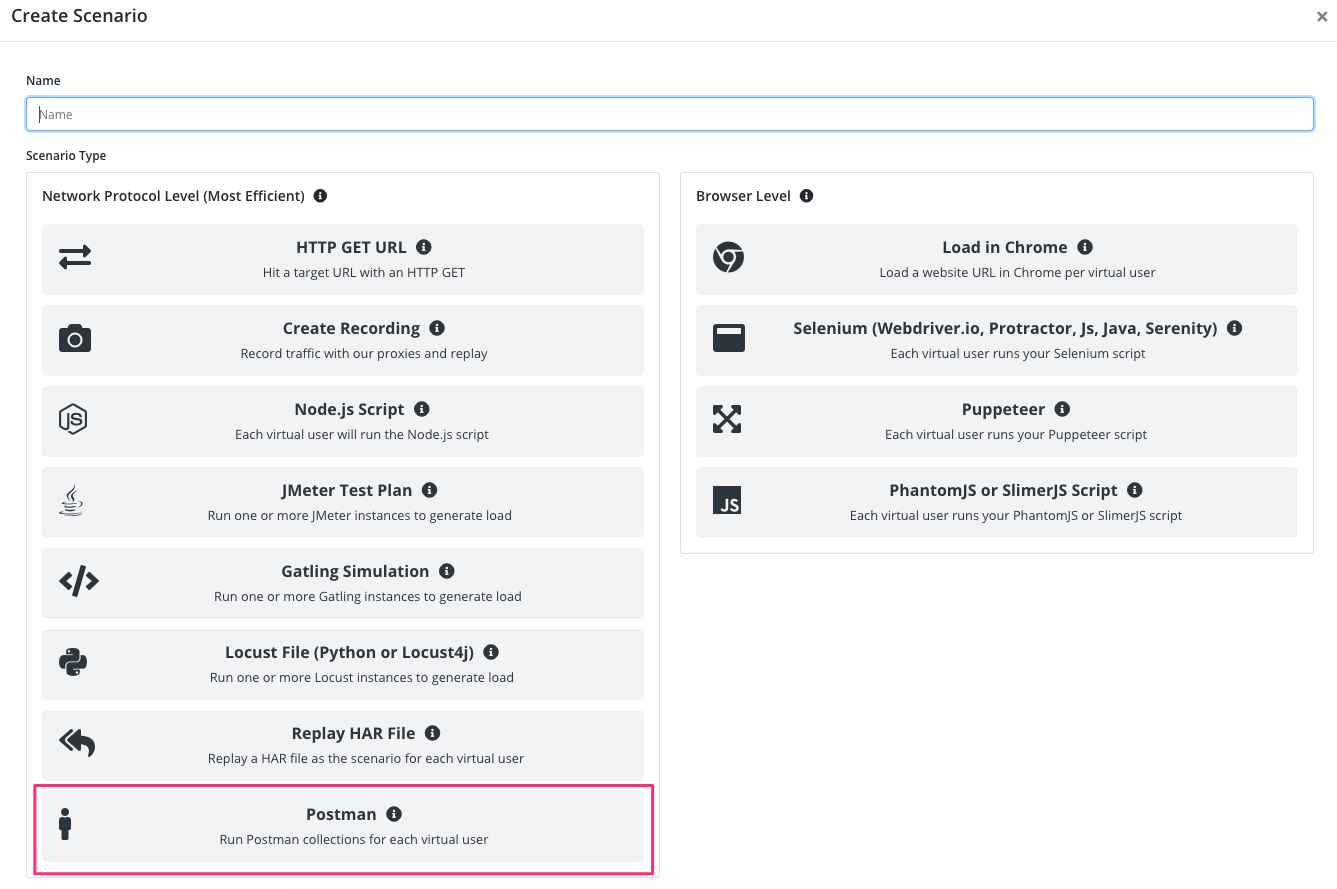

Scenario

Select Postman as the scenario type.

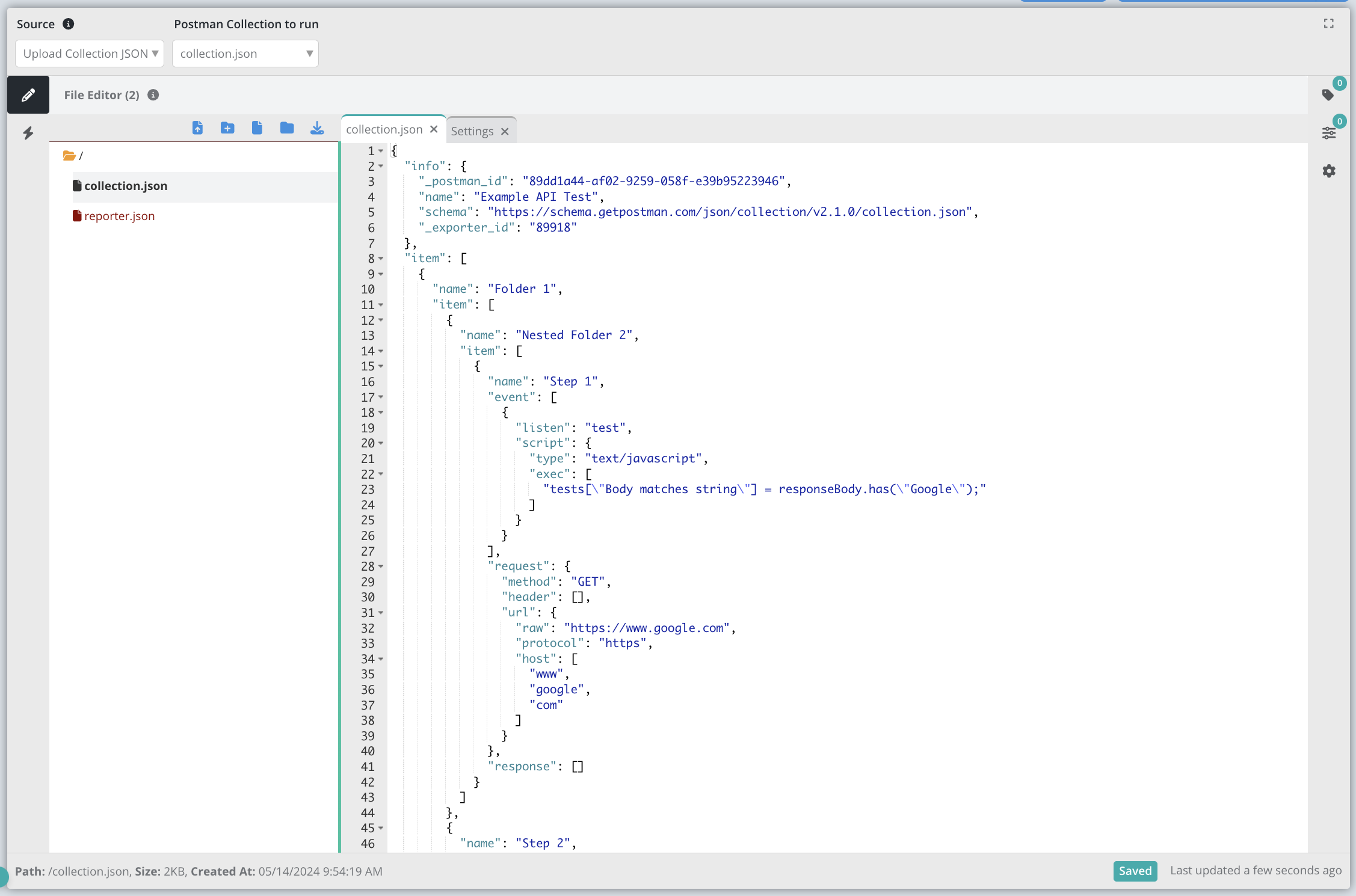

Then select Source = Upload Collection JSON.

Next, press the Add Collection JSON button to upload your collection JSON file.
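Before uploading, it can help to catch an obviously malformed file locally. The following is a hypothetical sanity check, not something Testable provides. It only confirms that the file parses as JSON and has the top-level shape of a Postman v2.x export: `info.name`, an `info.schema` URL containing `collection/v2`, and a non-empty `item` list. Passing it does not guarantee that Testable will accept the collection.

```python
import json
from typing import Any, List


def check_collection(data: Any) -> List[str]:
    """Return a list of problems with a parsed collection (empty if none were found)."""
    if not isinstance(data, dict):
        return ["top level is not a JSON object"]
    problems = []
    info = data.get("info")
    if not isinstance(info, dict):
        problems.append("missing info object")
    else:
        if not info.get("name"):
            problems.append("missing info.name")
        # v2.0 and v2.1 exports both reference a .../collection/v2.x.0/... schema URL.
        if "collection/v2" not in str(info.get("schema", "")):
            problems.append("info.schema does not look like a v2.x collection schema")
    items = data.get("item")
    if not isinstance(items, list) or not items:
        problems.append("item should be a non-empty list of requests or folders")
    return problems


def check_collection_file(path: str) -> List[str]:
    """Load a file and check it; an unreadable file or invalid JSON is reported as a problem."""
    try:
        with open(path, encoding="utf-8") as f:
            data = json.load(f)
    except (OSError, json.JSONDecodeError) as exc:
        return [f"cannot read JSON: {exc}"]
    return check_collection(data)
```

For example, `check_collection_file("postman-demo.postman_collection.json")` returns an empty list for a well-formed file, or a list of human-readable problems otherwise.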

Each virtual user will run the Postman Collection.

To try the scenario out before configuring a load test, click the Smoke Test button in the upper right and watch Testable execute the scenario once as a single user.

Click on the Configuration tab or press the Next button at the bottom to move to the next step.

Configuration

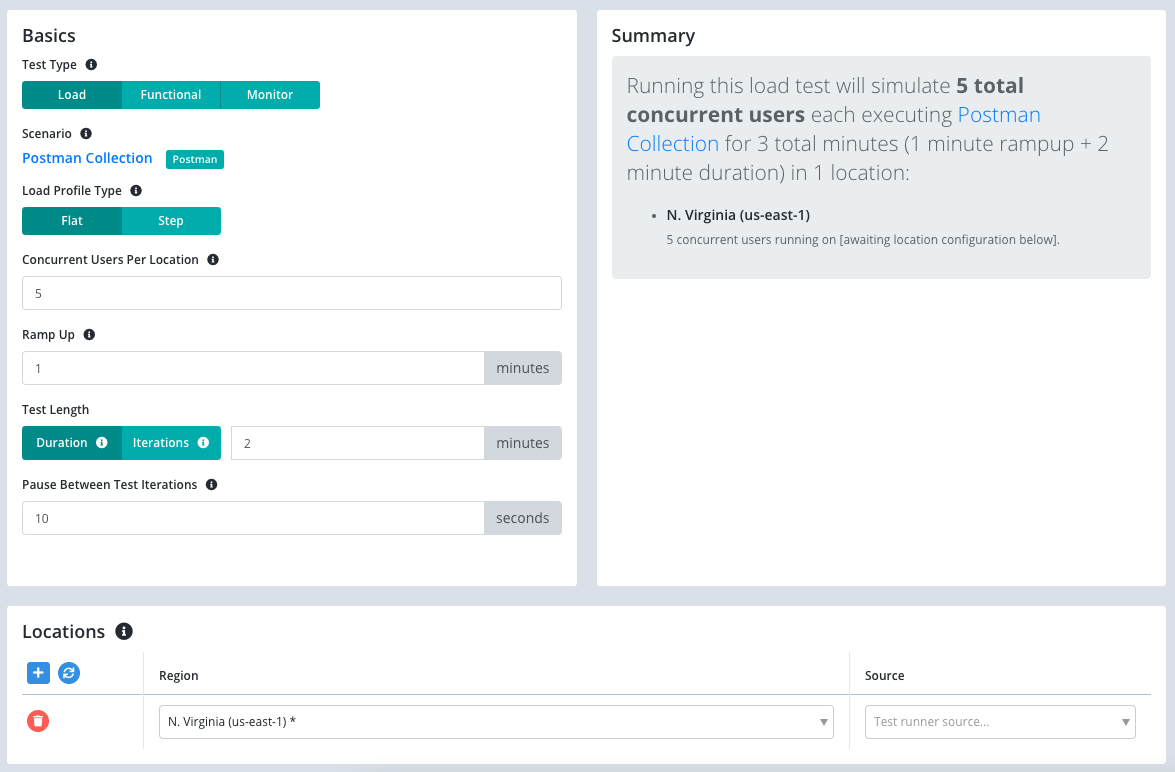

Now that we have the scenario for our test case, we need to define a few parameters before we can execute our test:

- Test Type: We select Load so that we can simulate multiple users as part of our test.

- Total Virtual Users: Number of users that will execute in parallel. Each user will replay the Postman collection.

- Test Length: Select Iterations to have each virtual user execute the scenario a set number of times, regardless of how long that takes. Choose Duration to have each virtual user keep executing the scenario for a set amount of time (in minutes).

- Location(s): Choose the location(s) in which to run your test, and the test runner source that determines which test runners in each location execute the load test (e.g. the public shared grid).
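The difference between the two Test Length modes can be modelled as a simple per-user loop. This is a simplified sketch under stated assumptions, not Testable's scheduler. `run_virtual_user` and its parameters are hypothetical. So is the rule that a Duration run starts a new iteration only while time remains. `pause_s` stands in for an optional pause between iterations. The clock and sleep functions are injectable so the loop can be exercised without actually waiting.

```python
import time
from typing import Callable, Optional


def run_virtual_user(
    run_collection: Callable[[], None],
    iterations: Optional[int] = None,
    duration_s: Optional[float] = None,
    pause_s: float = 0.0,
    clock: Callable[[], float] = time.monotonic,
    sleep: Callable[[float], None] = time.sleep,
) -> int:
    """Replay the collection until the chosen Test Length rule says stop.

    Returns the number of completed iterations. Exactly one of
    `iterations` (Iterations mode) or `duration_s` (Duration mode) must be set.
    """
    if (iterations is None) == (duration_s is None):
        raise ValueError("set exactly one of iterations or duration_s")
    start = clock()
    completed = 0
    while True:
        # Iterations mode: stop after a fixed count, however long it takes.
        if iterations is not None and completed >= iterations:
            break
        # Duration mode: do not start a new iteration once the time budget is spent.
        if duration_s is not None and clock() - start >= duration_s:
            break
        run_collection()
        completed += 1
        sleep(pause_s)
    return completed
```

With Iterations, the total number of collection runs is simply Total Virtual Users × iterations (for example, 5 users × 3 iterations = 15 runs). With Duration, the count depends on how long each replay takes.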

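For the sake of this example, let's use the following parameters:

- Test Type: Load

- Total Virtual Users: 5

- Test Length: Duration of 2 minutes, with a 10-second pause between iterations

- Location(s): AWS N. Virginia, on the Public Shared Grid

Press Start Test and watch the results start to flow in. See the configuration guide for full details on all configuration options.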

View Results

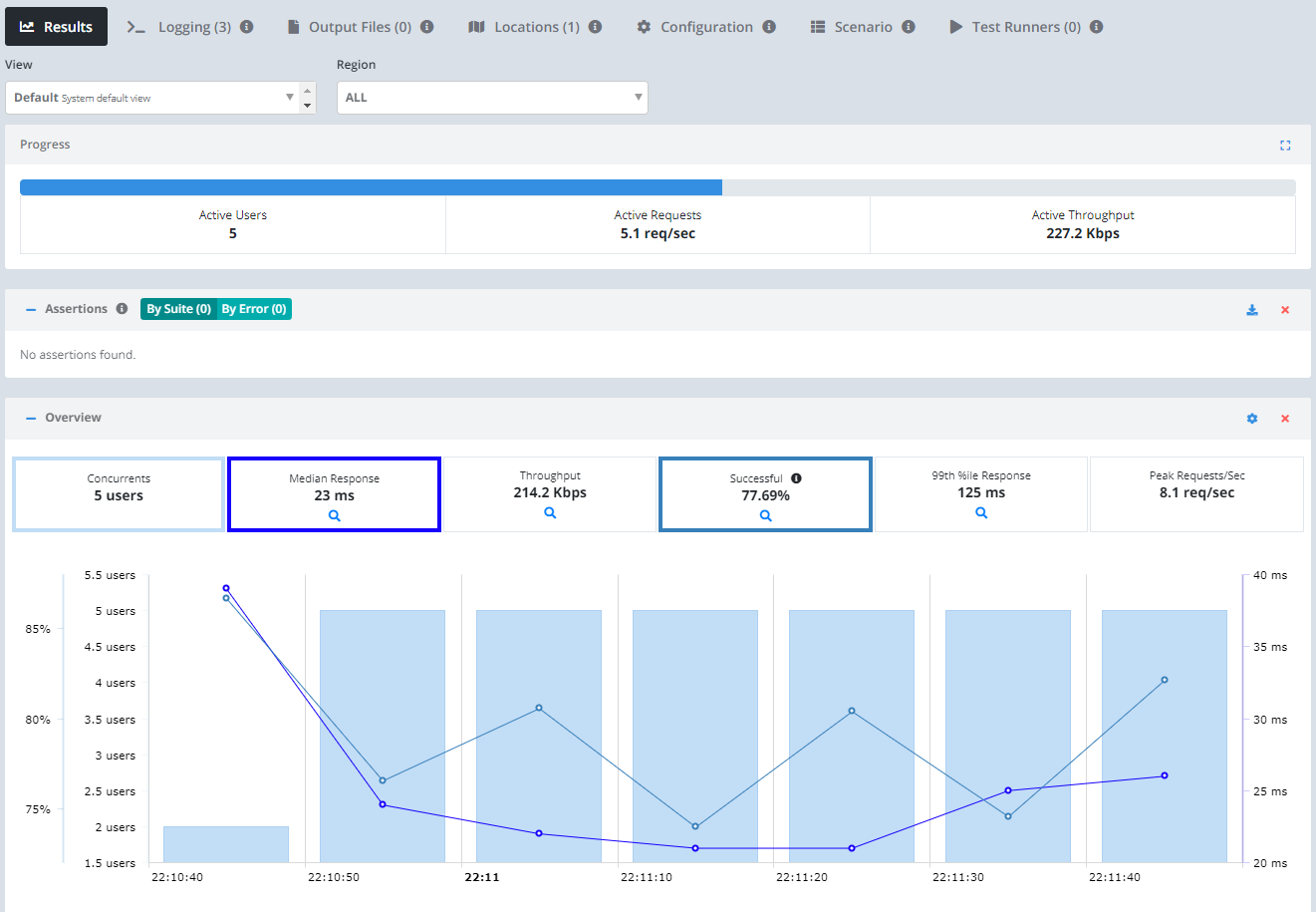

Once the test starts executing, Testable distributes the virtual users across the selected test runners (e.g. the Public Shared Grid in AWS N. Virginia), which run the simulation.

In each region, the test runners execute 5 concurrent virtual users, each replaying our Postman Collection for 2 minutes with a 10-second pause between iterations.
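As a rough back-of-the-envelope check on this example, you can estimate how many times each virtual user replays the collection. The sketch makes several hypothetical assumptions. One replay of the collection takes a fixed `collection_s` seconds. The 10-second pause follows every replay. A new iteration starts only while part of the 2-minute window remains. Real replay times vary, so treat the result as an estimate rather than the number Testable will report.

```python
import math


def estimated_iterations_per_user(duration_s: float, collection_s: float, pause_s: float) -> int:
    """Count iterations that start before duration_s elapses.

    Iteration k (0-based) starts at k * (collection_s + pause_s), so it is
    started only if that time is strictly less than duration_s.
    """
    cycle_s = collection_s + pause_s
    if cycle_s <= 0:
        raise ValueError("collection_s + pause_s must be positive")
    return math.ceil(duration_s / cycle_s)


def estimated_total_runs(users: int, duration_s: float, collection_s: float, pause_s: float) -> int:
    """Total collection replays across all virtual users in one location."""
    return users * estimated_iterations_per_user(duration_s, collection_s, pause_s)


if __name__ == "__main__":
    # Example settings: 5 users, 2 minutes, 10 s pause; the 2 s replay time is an assumption.
    print(estimated_total_runs(users=5, duration_s=120, collection_s=2, pause_s=10))
```

With an assumed 2-second replay, each user starts iterations at 0, 12, 24, ..., 108 seconds. That is 10 iterations, so the 5 users perform about 50 replays in total. A slower 20-second replay would reduce that to 4 iterations per user (20 in total).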

The results will include traces, performance metrics, logging, breakdown by URL, analysis, comparison against previous test runs, and more.

We also offer integrations with third-party tools such as New Relic (Org Management -> Integration). If you enable an integration, you can also perform more in-depth analysis of your results in that tool.

That’s it! Go ahead and try these same steps with your own scripts and feel free to contact us with any questions.