Self-Hosted Test Runner

- Introduction

- Prerequisites

- Windows/Mac

- Running

- Options

- Logging

- Execute a Test Case In Your Region

- Upgrading

- Available Images

- Certificate Authority

- Git Credentials

- FAQ

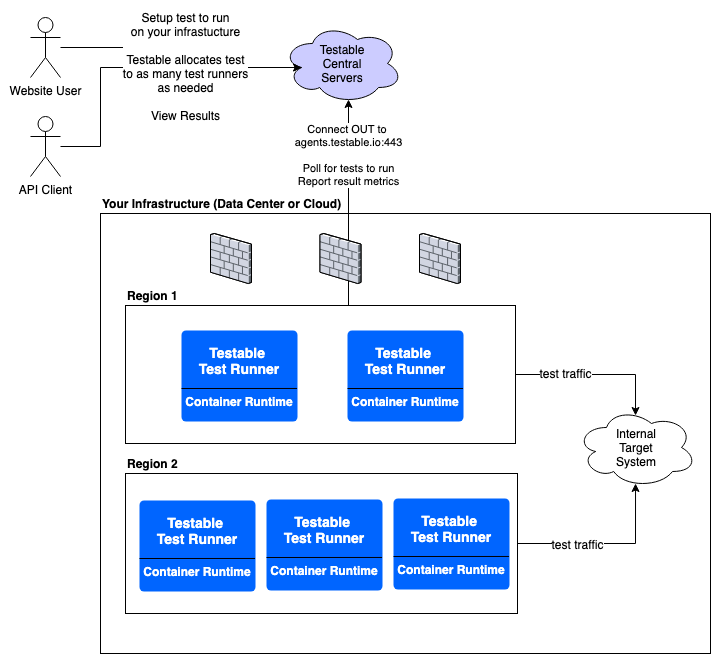

Introduction

Testable test runners are responsible for executing test cases and reporting back the results. Shared runners are provided in regions across the globe for your use. In certain cases it makes sense for users to have their own private runners. These situations include:

- Your product sits behind a firewall and is not reachable from outside that firewall.

- Your test requires IP address whitelisting. The global shared agents are dynamically allocated and removed, so a fixed range of IPs cannot be guaranteed.

- You need complete isolation from other users’ test cases.

- You want to leverage spare capacity on your own infrastructure.

Prerequisites

Install Docker Engine, Docker Compose, or Kubernetes

A container system must be installed first. Docker Engine is one option; Kubernetes is another. Instructions on how to install Docker can be found at https://docs.docker.com/engine/installation/.

Create an API Key

Log in to Testable and go to Settings -> Api Keys. Create a new API key; you will need it to run the agent.

The test runner will attempt to connect to https://agents.testable.io (port 443) to receive work and report on results. Make sure outbound network access is available from the host.

A quick way to check is to run telnet agents.testable.io 443 and confirm that it connects successfully.
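If telnet is not installed on the host, the same check can be scripted. The following is a minimal Python sketch (the function name can_reach is our own, not part of Testable) that only tests whether a TCP connection to agents.testable.io on port 443 can be opened. Like the telnet check, it does not perform a TLS handshake or authenticate with Testable.

```python
import socket


def can_reach(host: str = "agents.testable.io", port: int = 443, timeout: float = 5.0) -> bool:
    """Return True if a TCP connection to host:port can be opened within `timeout` seconds.

    Like the telnet check, this only proves the TCP handshake succeeds; no TLS
    handshake or authentication with Testable is attempted.
    """
    try:
        # create_connection resolves the host name and tries each returned address in turn.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # DNS failures (socket.gaierror), refused connections and timeouts are all OSError subclasses.
        return False
```

Run it on the host that will run the test runner. A False result means DNS resolution or a firewall is blocking outbound access; if you must go through a corporate proxy, a direct connection can fail even though the runner can still reach Testable via HTTP_PROXY or HTTPS_PROXY.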

Windows/Mac

The Docker based test runner is the preferred approach and supports all scenario types. We also provide Windows and Mac packages that you can install to run tests on those operating systems. The packaged runners only support the following scenario types: Selenium, Playwright, Puppeteer, Live sessions, Node.js scripts, HAR replay, and Postman.

- Windows: See the Windows guide for installation instructions.

- Mac: See the Mac guide for installation instructions.

Running

Docker Engine

docker run -e AGENT_REGION_NAME=[region-name] -e AGENT_KEY=[api-key] -v /logs --privileged --security-opt seccomp:unconfined -d testable/runner:prod_latest

The region name can be anything you want. This name will appear in the Region multi-select when you create or update your test configuration (Locations => Self-Hosted => [region]). The AGENT_KEY is the API key you created earlier (Settings -> Api Keys).

Docker Compose

The equivalent docker-compose.yml if using Docker Compose:

version: "3.7"
services:
  testrunners:
    image: testable/runner:prod_latest
    environment:
      - AGENT_REGION_NAME=[region-name]
      - AGENT_KEY=[api-key]
    volumes:
      - data:/logs
    security_opt:
      - seccomp:unconfined
    privileged: true
volumes:
  data: {}

Kubernetes Deployment

The equivalent Kubernetes deployment configuration file:

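apiVersion: apps/v1
kind: Deployment
metadata:
  name: testable-testrunner
spec:
  replicas: 1
  selector:
    matchLabels:
      app: testable-testrunner  # any label works as long as it matches the pod template below
  template:
    metadata:
      labels:
        app: testable-testrunner
    spec:
      containers:
        - name: testable-testrunner-ctr
          image: testable/runner:prod_latest
          env:
            - name: AGENT_REGION_NAME
              value: "[region-name]"  # quoted so YAML reads it as a string, not a list
            - name: AGENT_KEY
              value: "[api-key]"
          securityContext:
            privileged: true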

Options

Full set of accepted environment variables:

- AGENT_KEY (required): API key used to authenticate your agent (create API keys at Settings -> Api Keys). This can be a comma separated list of keys if you want to register your agent in multiple organizations.

- AGENT_REGION_NAME (required): The name of the region. If you provide a comma separated list for AGENT_KEY, this must be a comma separated list of region names, one for each key.

- AGENT_SERVER_URL (required to connect to your own Testable Enterprise environment): The URL of the coordinator service that this test runner should connect to. For Testable Cloud this defaults to https://agents.testable.io.

- AGENT_LOG_LEVEL: The logger level. Choices are error, warn, info, verbose, and debug. Defaults to info.

- AGENT_LOG_CONSOLE: Whether or not to log to the console. Defaults to false.

- AGENT_CONCURRENT_CLIENTS: Number of concurrent clients the agent can execute at any one time. Defaults to 99999999.

- AGENT_REGION_DESCRIPTION: The description that appears with the region name when configuring test cases. Defaults to the region name if not specified.

- AGENT_UPDATE_LAT_LONG: Whether or not to update the location of your agent at runtime. Defaults to false. If true, Testable geolocates your IP address, and your agent appears at that location on the map in the test results.

- AGENT_LATITUDE: The latitude at which your agents are located. If set along with AGENT_LONGITUDE, your region’s location is updated to these values and the geolocation of your IP address is ignored.

- AGENT_LONGITUDE: The longitude at which your agents are located. If set along with AGENT_LATITUDE, your region’s location is updated to these values and the geolocation of your IP address is ignored.

- AGENT_BROWSER_CLEANUP_DURATION: For browser based testing on long running test runners you may end up installing many browser versions over time. This setting tells Testable to remove browser versions that have not been used for a certain amount of time. The value is a number of days, months, or years, e.g. 15d (15 days), 3m (3 months), 1y (1 year).

- AGENT_BROWSER_CLEANUP_DISK_USAGE: For browser based testing on long running test runners you may end up installing many browser versions over time. This setting tells Testable to remove old unused browser versions once a certain percentage of the available disk space is used up. Provide a number, e.g. 90 (clean up once disk utilization exceeds 90%).

- PUBLIC_HOSTNAME: Optionally specify the hostname of the server running the Docker container. This is important if you run multiple test runners on one server, so that Testable does not count the available memory/CPU multiple times.

- HTTP_PROXY or HTTPS_PROXY: If you are behind a corporate proxy, set this to the URL of your proxy server. All calls to Testable are then routed via this proxy. Example formats: http://[host]:[port] or http://[username]:[password]@[host]:[port]. Note that this does not proxy network requests made by your test scripts during test execution. Consult the documentation for the relevant tool (e.g. JMeter, Gatling, Locust, Node.js) if you also want to proxy those calls.

- AGENT_GIT_AUTH: Path to a git credentials file. If your test scenarios use version control integration, you can provide credentials via a local file on the test runner. See the Git Credentials section below for the file format and more details.

- AGENT_USE_S3: Whether to download new browser versions directly from AWS S3 in N. Virginia (true) or via the Testable Cloud endpoint (false). Defaults to true, except for Windows self-hosted runners where it defaults to false. If your network does not allow connections to S3, set this to false.

- AGENT_FILES_ENABLED: Whether to capture output files from tests executed on this test runner. Any files written to the special OUTPUT_DIR directory are captured into the test results. Defaults to true. Can also be set at the organization level via Settings => Test Runner Data.

- AGENT_SOURCE_CODE_ENABLED: Whether to capture the source code from the repository used to run your test into the test results as a zip file. Defaults to true. Can also be set at the organization level via Settings => Test Runner Data.

- AGENT_TRACE_ENABLED: Whether to capture traces for all tests executed on this test runner. Defaults to true. Can also be set at the organization level via Settings => Test Runner Data.

- AGENT_LOGGING_ENABLED: Whether to capture logs from processes launched as part of your test (e.g. Selenium, Puppeteer, JMeter) for all tests executed on this test runner. Defaults to true. Can also be set at the organization level via Settings => Test Runner Data.

- AGENT_TAG: A comma separated list of tags to associate with the test runner. When a user assigns a test to a region, they can specify which tags a test runner must have to be assigned the test.

- AGENT_NPM_INSTALL_TIMEOUT_MS: For scenarios that require an npm install, how long Testable waits before considering the install as timed out. Defaults to 900000 milliseconds (15 minutes) if not specified.
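Several of these variables have to agree with each other, so it can help to validate them before starting a container. Below is a minimal Python sketch of such a pre-flight check, assuming the rules described in the list above: AGENT_KEY and AGENT_REGION_NAME contain the same number of comma separated entries, AGENT_BROWSER_CLEANUP_DURATION is a whole number followed by d, m, or y, and AGENT_BROWSER_CLEANUP_DISK_USAGE is a percentage. The function names are hypothetical, and this is not how the runner itself parses its configuration.

```python
import re
from typing import Mapping

# Hypothetical pre-flight validator; it mirrors the documented rules but is not
# the Testable runner's own configuration parser.
_DURATION = re.compile(r"^\d+[dmy]$")  # e.g. 15d, 3m, 1y (assumed: whole numbers only)


def _split(value: str) -> list[str]:
    """Split a comma separated value, dropping surrounding spaces and empty entries."""
    return [part.strip() for part in value.split(",") if part.strip()]


def pair_keys_with_regions(agent_key: str, region_name: str) -> list[tuple[str, str]]:
    """Pair each AGENT_KEY entry with the AGENT_REGION_NAME entry in the same position."""
    keys, regions = _split(agent_key), _split(region_name)
    if not keys or not regions:
        raise ValueError("AGENT_KEY and AGENT_REGION_NAME are both required")
    if len(keys) != len(regions):
        raise ValueError(f"{len(keys)} keys but {len(regions)} region names; expected one region per key")
    return list(zip(keys, regions))


def check_cleanup_duration(value: str) -> None:
    """Reject AGENT_BROWSER_CLEANUP_DURATION values that do not look like 15d, 3m or 1y."""
    if not _DURATION.match(value.strip()):
        raise ValueError(f"AGENT_BROWSER_CLEANUP_DURATION: expected <number><d|m|y>, got {value!r}")


def check_cleanup_disk_usage(value: str) -> None:
    """Reject AGENT_BROWSER_CLEANUP_DISK_USAGE values that are not a percentage such as 90."""
    percent = float(value)  # raises ValueError for non-numeric input
    if not 0 < percent <= 100:
        raise ValueError(f"AGENT_BROWSER_CLEANUP_DISK_USAGE: expected 0-100, got {value!r}")


def validate_env(env: Mapping[str, str]) -> list[tuple[str, str]]:
    """Run all checks and return the (key, region) pairs in order."""
    pairs = pair_keys_with_regions(env.get("AGENT_KEY", ""), env.get("AGENT_REGION_NAME", ""))
    if "AGENT_BROWSER_CLEANUP_DURATION" in env:
        check_cleanup_duration(env["AGENT_BROWSER_CLEANUP_DURATION"])
    if "AGENT_BROWSER_CLEANUP_DISK_USAGE" in env:
        check_cleanup_disk_usage(env["AGENT_BROWSER_CLEANUP_DISK_USAGE"])
    return pairs
```

For example, validate_env(dict(os.environ)) returns the (key, region) pairs in order, or raises ValueError describing the first problem it finds.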

To apply resource constraints (e.g. memory or CPU limits) to the container, see Docker's documentation on runtime resource constraints. Otherwise, the test runner container can use as much of a given resource as the host’s kernel scheduler allows.

Logging

Logs are written to /logs/agent.log inside the container. Mount the /logs volume to a host directory (e.g. -v /path/on/host:/logs) if you want to access the log from outside of Docker. Ten days’ worth of logs are kept in the container using a daily rolling strategy.

Execute a Test Case In Your Region

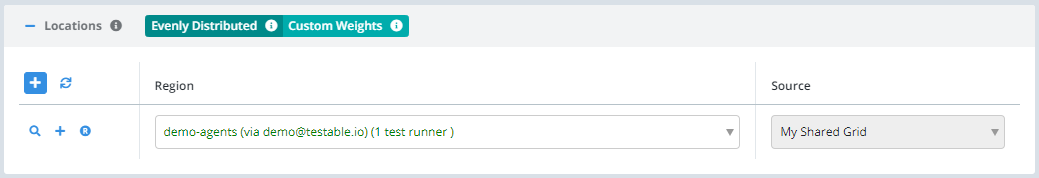

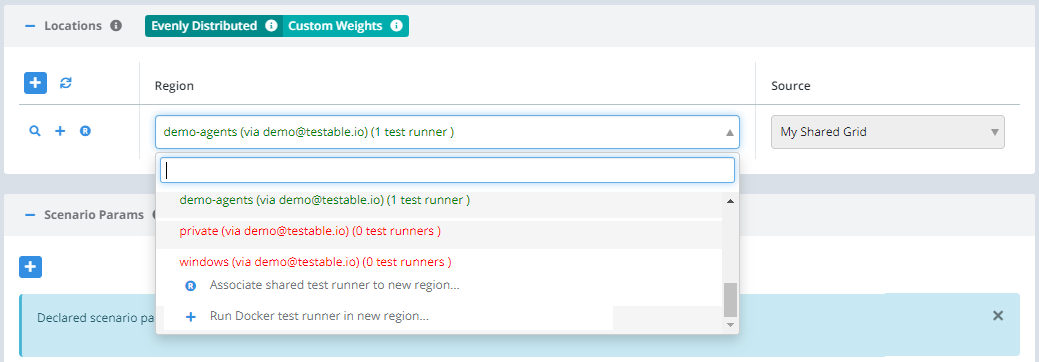

Create a new test case, and during the Configuration step you will see your region name in the Locations list. Select it and when your test starts it will execute on one of your test runners.

Note that you can have multiple regions of your own each with multiple test runners.

Upgrading

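To upgrade to the latest version of the test runner software, pull the latest image and then re-create your test runner containers so that they start from the new image: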

docker pull testable/runner:prod_latest

Available Images

The following images are available depending on your use case. Check out the readme for each image on Docker Hub (https://hub.docker.com/r/testable) for more details.

- testable/runner:full_prod_latest: Standard Testable image with all versions of all tools we support.

- testable/runner:slim_prod_latest: Has the latest version of each tool we support installed (e.g. JMeter, Gatling, Locust, Selenium).

- testable/runner-[node|java|jmeter|gatling|locust|selenium|phantomjs|playwright|puppeteer]:prod_latest: Scenario type specific images that only contain the libraries necessary to run that specific tool with Testable. Each image has various version specific tags, but each one has prod_latest, which refers to the latest available production ready build.

Certificate Authority

There are two situations in which you need to configure certificate authorities (CAs):

- The websites and APIs you plan to test are served over TLS with certificates issued by a corporate certificate authority (CA). You will need to make sure the test runner is aware of your CA.

- Your corporate proxy injects its own certificate chain for HTTPS requests. In this case the connection back to Testable servers will fail until you make the container aware of the proxy CA certificates in the chain.

How to do this depends on the scenario type and whether it is run via Node.js, the Java Virtual Machine, or Python.

For Kubernetes deployments, see our Kubernetes guide.

Git Credentials

For test scenarios that utilize a version control link you would typically provide the credentials (either access token or SSH) via the Testable web application.

These are then stored encrypted on Testable Cloud (using your own encryption key for enterprise customers) and provided to the test runner when it is assigned the test to run. The test runner then clones the repository using those credentials.

You can also provide these credentials via a local file on the test runner instead. In this case, set up all Git links with “anonymous” authentication in the Testable web application.

The format of this local file:

# access token auth

[repo-url]|[username]|[token]

# private key auth

[repo-url]|[local-path-to-ssh-key]

# access token example

https://github.com/testable/wdio-testable-example|myuser|xxxxxxxxxxxxx

# private key example

git@github.com:testable/wdio-testable-example.git|/path/to/key.pem

Set the AGENT_GIT_AUTH environment variable to the path of this file (e.g. AGENT_GIT_AUTH=/path/to/authfile). For Docker based test runners, also mount the file into the container as a volume so that this path inside the container points to the file on the host file system. The same applies to any SSH key paths referenced in the file.
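Before mounting the file, you may want to check that every line follows the format above. The following Python sketch is a hypothetical validator, not Testable's own parser. It assumes that blank lines and lines starting with # are comments (as in the example) and that no field itself contains a | character.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class GitCredential:
    repo_url: str
    username: Optional[str] = None      # access token auth only
    token: Optional[str] = None         # access token auth only
    ssh_key_path: Optional[str] = None  # private key auth only


def parse_git_auth(text: str) -> list[GitCredential]:
    """Parse an AGENT_GIT_AUTH style file: one '|' separated credential per line.

    Assumes blank lines and lines starting with '#' are comments, and that no
    field contains a '|' character.
    """
    creds: list[GitCredential] = []
    for lineno, raw in enumerate(text.splitlines(), start=1):
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        fields = [field.strip() for field in line.split("|")]
        if any(not field for field in fields):
            raise ValueError(f"line {lineno}: empty field")
        if len(fields) == 3:    # [repo-url]|[username]|[token]
            creds.append(GitCredential(fields[0], username=fields[1], token=fields[2]))
        elif len(fields) == 2:  # [repo-url]|[local-path-to-ssh-key]
            creds.append(GitCredential(fields[0], ssh_key_path=fields[1]))
        else:
            raise ValueError(f"line {lineno}: expected 2 or 3 '|' separated fields, got {len(fields)}")
    return creds
```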

CA setup for the Testable server connection + Node.js based scenarios: Node.js Script, Puppeteer, Playwright, Webdriver.io, Protractor, Postman

The process within the container that connects back to Testable servers (for Testable Cloud this is https://agents.testable.io) runs with Node.js. If your company uses a proxy that injects its own certificates you need to follow the directions in this section.

Node.js expects a PEM formatted file containing one or more trusted certificates; set the NODE_EXTRA_CA_CERTS environment variable to the path of that file.

docker run .... -v /local/path/to/ca.pem:/home/testable/ca.pem -e NODE_EXTRA_CA_CERTS=/home/testable/ca.pem ....

CA setup for JVM based scenarios: JMeter, Gatling, Java, Selenium Java, Serenity

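Provide your own cacerts file (the Java truststore containing your CA certificates) and mount it over the default cacerts file of each JVM version that Testable supports or that you plan to use. Check /usr/lib/jvm in your image to confirm which JDK versions are installed and where each one keeps its cacerts file.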

docker run .... -v /local/path/to/cacerts:/usr/lib/jvm/jdk-1.8.0/jre/lib/security/cacerts -v /local/path/to/cacerts:/usr/lib/jvm/jdk-11.0.5/jre/lib/security/cacerts -v /local/path/to/cacerts:/usr/lib/jvm/jdk-17.0.3/jre/lib/security/cacerts ....

CA setup for Python based scenarios: Locust

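The Python requests library used by Locust also expects a PEM formatted file containing one or more trusted certificates; set the REQUESTS_CA_BUNDLE environment variable to the path of that file. Note that requests uses this file in place of its default CA bundle, so it must also contain any public CAs your tests rely on.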

docker run .... -v /local/path/to/ca.pem:/home/testable/ca.pem -e REQUESTS_CA_BUNDLE=/home/testable/ca.pem ....
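Both NODE_EXTRA_CA_CERTS and REQUESTS_CA_BUNDLE take a single PEM file, so if your corporate CA and proxy certificates come as separate files you need to combine them first. The Python sketch below (build_ca_bundle is a hypothetical helper) copies every BEGIN/END CERTIFICATE block it finds into one bundle. It only checks the PEM markers, not whether each certificate is valid. For REQUESTS_CA_BUNDLE you can include a public CA bundle as one of the sources so that public sites still verify.

```python
import re
from pathlib import Path

# One PEM encoded certificate, from its BEGIN marker to its END marker.
_PEM_CERT = re.compile(
    r"-----BEGIN CERTIFICATE-----\r?\n.+?\r?\n-----END CERTIFICATE-----", re.DOTALL
)


def build_ca_bundle(sources: list[str], dest: str) -> int:
    """Write every certificate found in `sources` into a single PEM file at `dest`.

    Text outside BEGIN/END CERTIFICATE blocks (comments, private keys, etc.) is
    dropped. Only the PEM markers are checked, not the certificates themselves.
    Returns the number of certificates written.
    """
    certs: list[str] = []
    for src in sources:
        found = _PEM_CERT.findall(Path(src).read_text())
        if not found:
            raise ValueError(f"{src}: no PEM certificate found")
        certs.extend(found)
    Path(dest).write_text("\n".join(certs) + "\n")
    return len(certs)
```

For example, build_ca_bundle(["corp-root.pem", "proxy-ca.pem"], "ca.pem") (file names are illustrative) writes ca.pem, which can then be mounted as /home/testable/ca.pem in the docker run commands for Node.js or Python scenarios.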

FAQ

I don’t see my region in the list.

Try reloading the Testable page and it should appear as a region to select for your test configuration. If it still doesn’t appear, check the outbound network access to agents.testable.io.

My tests keep crashing when run on my test runner.

If you set a -m or --memory option with docker run, you may have given the container too little memory to run your test. Use docker inspect [container-id] to check the memory settings of your container.
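To check this from a script: docker inspect prints a JSON array with one object per container, and the limit set with -m or --memory appears in bytes in its HostConfig.Memory field, where 0 means no limit was set. A minimal Python sketch (the function names are our own):

```python
import json
import subprocess
from typing import Optional


def memory_limit_bytes(inspect_json: str) -> Optional[int]:
    """Return HostConfig.Memory from `docker inspect` output, or None if no limit is set."""
    info = json.loads(inspect_json)[0]  # one object per inspected container
    limit = info["HostConfig"]["Memory"]
    return limit or None  # 0 means the container has no memory limit


def container_memory_limit(container_id: str) -> Optional[int]:
    """Run `docker inspect` for one container and return its memory limit in bytes."""
    out = subprocess.run(
        ["docker", "inspect", container_id], capture_output=True, text=True, check=True
    ).stdout
    return memory_limit_bytes(out)
```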

I use Selenium Webdriver.io + Chrome and the browser is crashing.

There are two potential issues here:

1. Shared Memory

Chrome relies heavily on shared memory (/dev/shm), and by default Docker gives a container only 64 MB of it, which is often not enough.

To check for this issue, first run ipcs -lm on the host. Check the output for max total shared memory (kbytes).

Next, run the same command inside your Docker container: docker exec [container-id] ipcs -lm and note the value of max total shared memory (kbytes). If the shared memory in your container is lower, this is a likely cause.
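The comparison can also be scripted. This Python sketch (the helper names are hypothetical) runs ipcs -lm on the host and through docker exec, then extracts the max total shared memory (kbytes) value from each. It assumes the usual Linux ipcs output format and that ipcs is available inside the container.

```python
import re
import subprocess
from typing import Optional

_TOTAL_SHM = re.compile(r"max total shared memory \(kbytes\)\s*=\s*(\d+)")


def max_total_shm_kb(ipcs_output: str) -> int:
    """Extract 'max total shared memory (kbytes)' from `ipcs -lm` output."""
    match = _TOTAL_SHM.search(ipcs_output)
    if match is None:
        raise ValueError("'max total shared memory (kbytes)' not found in ipcs output")
    return int(match.group(1))


def ipcs_lm(container_id: Optional[str] = None) -> str:
    """Run `ipcs -lm` on the host, or inside a container via `docker exec`."""
    cmd = ["ipcs", "-lm"] if container_id is None else ["docker", "exec", container_id, "ipcs", "-lm"]
    return subprocess.run(cmd, capture_output=True, text=True, check=True).stdout


def container_shm_is_lower(container_id: str) -> bool:
    """True if the container reports less total shared memory than the host."""
    return max_total_shm_kb(ipcs_lm(container_id)) < max_total_shm_kb(ipcs_lm())
```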

Recent versions of Docker support a setting for shared memory: --shm-size 4G. If that does not work, you can try mapping shared memory as a volume: -v /dev/shm:/dev/shm or -v /run/shm:/dev/shm, depending on the location of shared memory on the host. To find that location, run mount -t tmpfs and look for a line such as tmpfs on /XXX/shm type tmpfs (rw,nosuid,nodev) in the output; /XXX/shm is the shared memory location on your host.
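The mount lookup can be automated as well. The Python sketch below (hypothetical helpers) scans the output of mount -t tmpfs for a mount point ending in /shm and builds the matching -v flag. It assumes the tmpfs on /XXX/shm type tmpfs (...) line format and returns None when no such mount exists.

```python
import re
from typing import Optional

# A line of `mount` output looks like: tmpfs on /dev/shm type tmpfs (rw,nosuid,nodev)
_MOUNT_LINE = re.compile(r"^\S+ on (\S+) type tmpfs ")


def host_shm_location(mount_output: str) -> Optional[str]:
    """Return the first tmpfs mount point ending in /shm, e.g. /dev/shm or /run/shm."""
    for line in mount_output.splitlines():
        match = _MOUNT_LINE.match(line)
        if match and match.group(1).endswith("/shm"):
            return match.group(1)
    return None


def shm_volume_flag(mount_output: str) -> Optional[str]:
    """Build the docker run -v flag that maps the host's shared memory to /dev/shm."""
    location = host_shm_location(mount_output)
    return None if location is None else f"-v {location}:/dev/shm"
```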

2. Docker security settings

On some Linux installations (e.g. Ubuntu 22.04) the default security settings for your docker container are not sufficient for Chrome to launch.

To fix this, make sure your docker run command includes --security-opt seccomp:unconfined. If you prefer not to disable seccomp entirely, use a more restrictive seccomp profile that still allows Chrome to launch.

Has your container image passed an independent security scan?

Yes. Our test runner image is scanned by Docker Hub’s security scanning service on every release.

What data is transmitted back to Testable Cloud?

Test runners transmit several types of data back to Testable Cloud.

- Test Runner Telemetry Data (regularly): Every few seconds the test runner collects and sends back the memory utilization, CPU utilization, network utilization, and number of active or established TCP connections on the test runner. Only the last 10 observed values are kept when no tests are actively running on the test runner. While a test is running, this data is associated with the test results and is kept until the test results are deleted or cleaned up due to retention rules.

- Test Related Data (during test execution): While a test is running, we collect several types of data that are then sent back to Testable Cloud. This data is retained until the test results are deleted or cleaned up due to retention rules.

  - Metrics: A variety of metrics are captured for every network request your test makes. Read more about the metrics we capture in the Testable documentation. Any custom metrics are transmitted back as well.

  - Traces: A trace includes the full details of a network request, including the request headers, request body, response headers, and response body. The test runner intelligently samples network requests, based on URL and response code, to capture a trace for a small percentage of the requests your test makes. Traces can be disabled for all tests run on this test runner using AGENT_TRACE_ENABLED=false, or per test via the test configuration advanced options.

  - Output Files: Any files your test writes to the directory specified via the OUTPUT_DIR environment variable are also transmitted back. It’s up to your specific test to decide whether to write any files, screenshots, etc. to this directory.

  - Live Video: For browser based tests only, you can enable a live video recording for a sampling of virtual user iterations. This is controlled via a scenario setting.